👨🎓 About me

I am currently a 2nd-Year Master student at Tsinghua University  . I got B.Eng. degree in Computer Science (Yingcai Honors College) at University of Electronic Science and Technology of China

. I got B.Eng. degree in Computer Science (Yingcai Honors College) at University of Electronic Science and Technology of China  from 2020 to 2024. My current research interest is Generative AI, including Image&Video Generation, human-centric generation and effiicient training methods.

from 2020 to 2024. My current research interest is Generative AI, including Image&Video Generation, human-centric generation and effiicient training methods.

I am looking for a Ph.D. position starting in Fall 2027. I’m always open to connecting and discussing my research interests!🤝🤝🤝

📰 News

- [2025-11-08] Our Paper FilmWeaver is accepted by

AAAI 2026. - [2025-08-24] The code of CanonSwap is released. Welcome to star it.

- [2025-07-26] Our Paper “Human Motion Video Generation: A Survey” is accepted by

TPAMI. - [2025-06-26] Our paper “CanonSwap” is accepted by

ICCV 2025.

📝 Publications

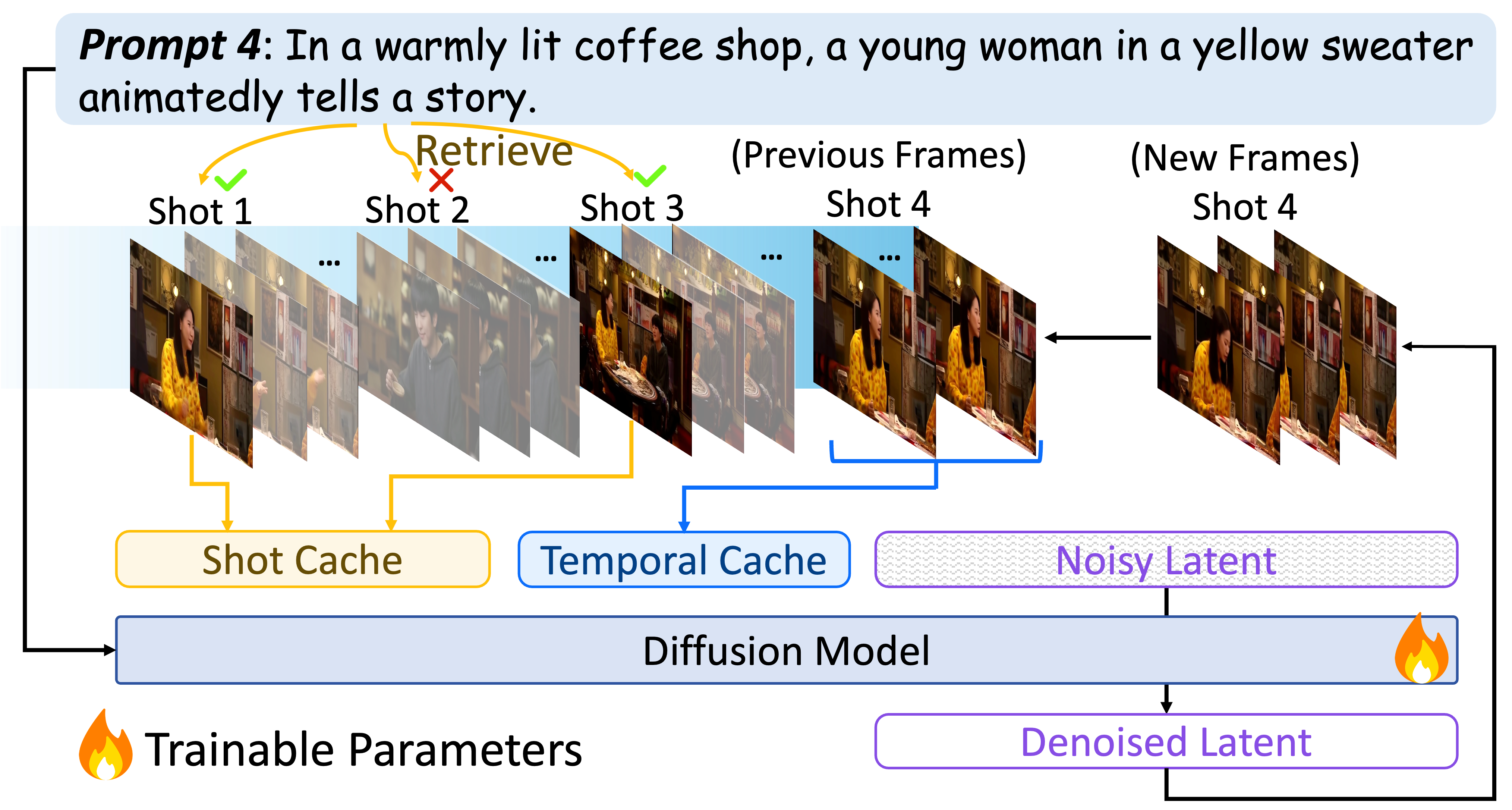

FilmWeaver: Weaving Consistent Multi-Shot Videos with Cache-Guided Autoregressive Diffusion

Xiangyang Luo, Qingyu Li, Xiaokun Liu, Wenyu Qin, Miao Yang, Meng Wang, Pengfei Wan, Di Zhang, Kun Gai, Shao-Lun Huang

Paper Page

- FilmWeaver generates consistent multi-shot videos of arbitrary length via an autoregressive diffusion paradigm, enforcing inter-shot consistency with a Shot Cache and intra-shot coherence with a Temporal Cache, and supports applications such as concept injection and video extension.

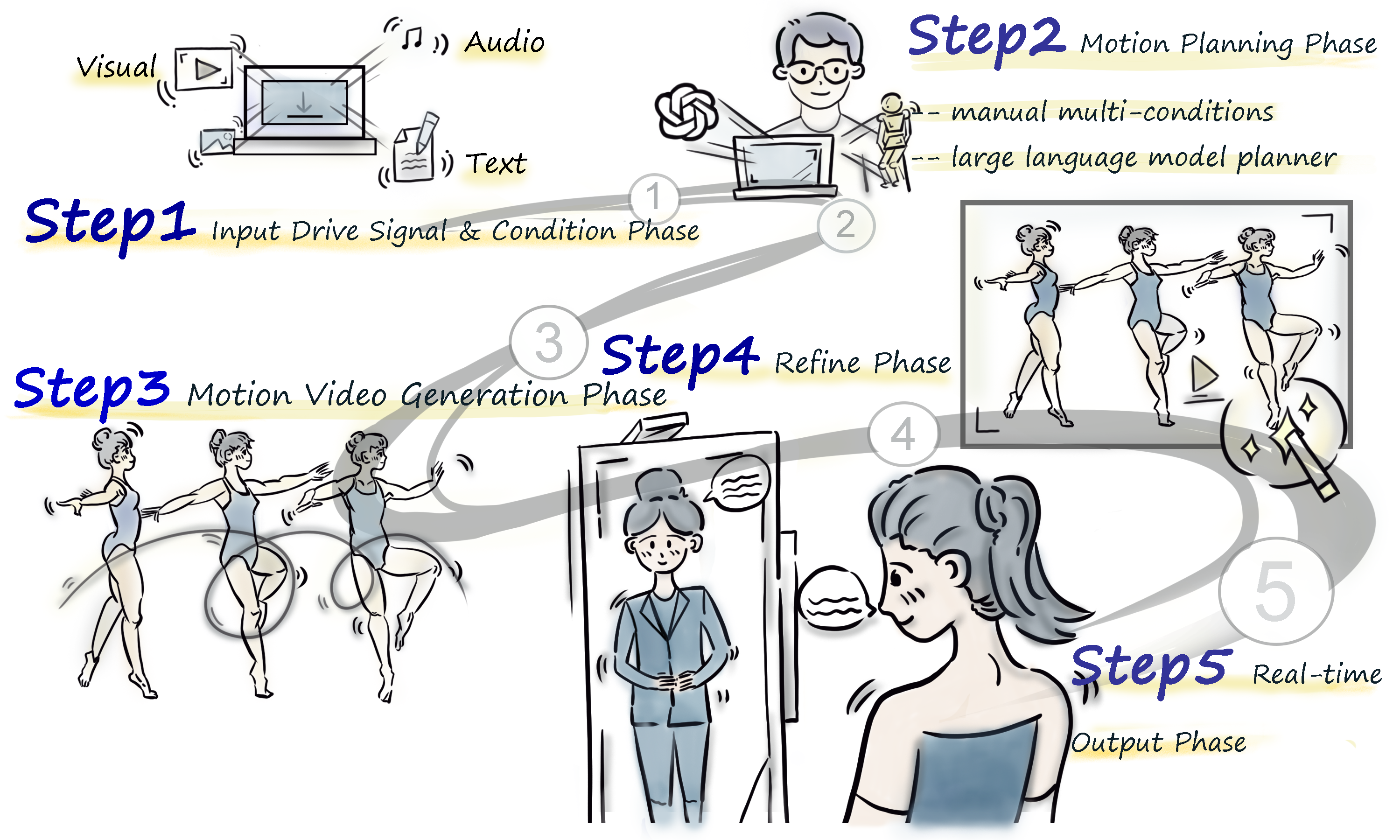

Human Motion Video Generation: A Survey

Haiwei Xue, Xiangyang Luo, Zhanghao Hu, Xin Zhang, Xunzhi Xiang, Yuqin Dai, Jianzhuang Liu, Minglei Li, Jian Yang, Fei Ma, Changpeng Yang, Zonghong Dai, Fei Richard Yu

Paper Page

- This survey provides a comprehensive review of human motion video generation methods, covering the latest techniques, applications, and future directions.

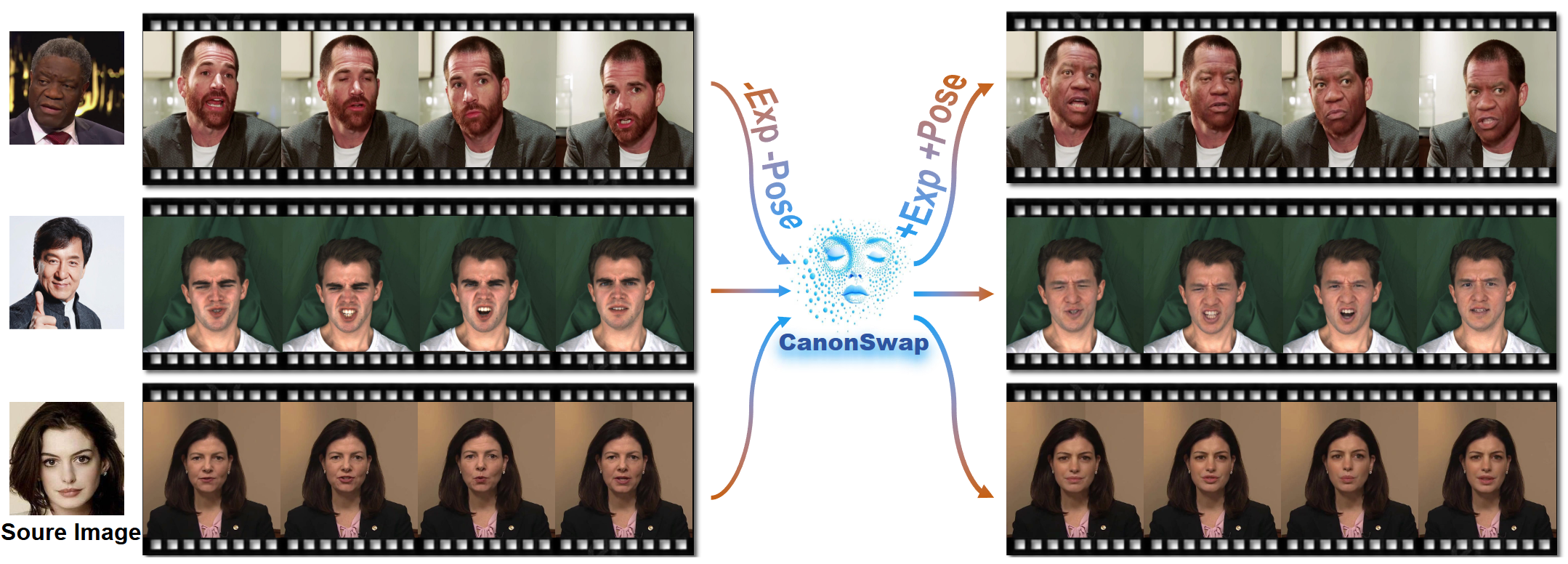

CanonSwap: High-Fidelity and Consistent Video Face Swapping via Canonical Space Modulation

Xiangyang Luo , Ye Zhu†, Yunfei Liu, Lijian Lin, Cong Wan, Zijian Cai, Shao-Lun Huang†, Yu Li

Paper Page Code

- CanonSwap decouples motion information from appearance to enable high-fidelity and consistent video face swapping.

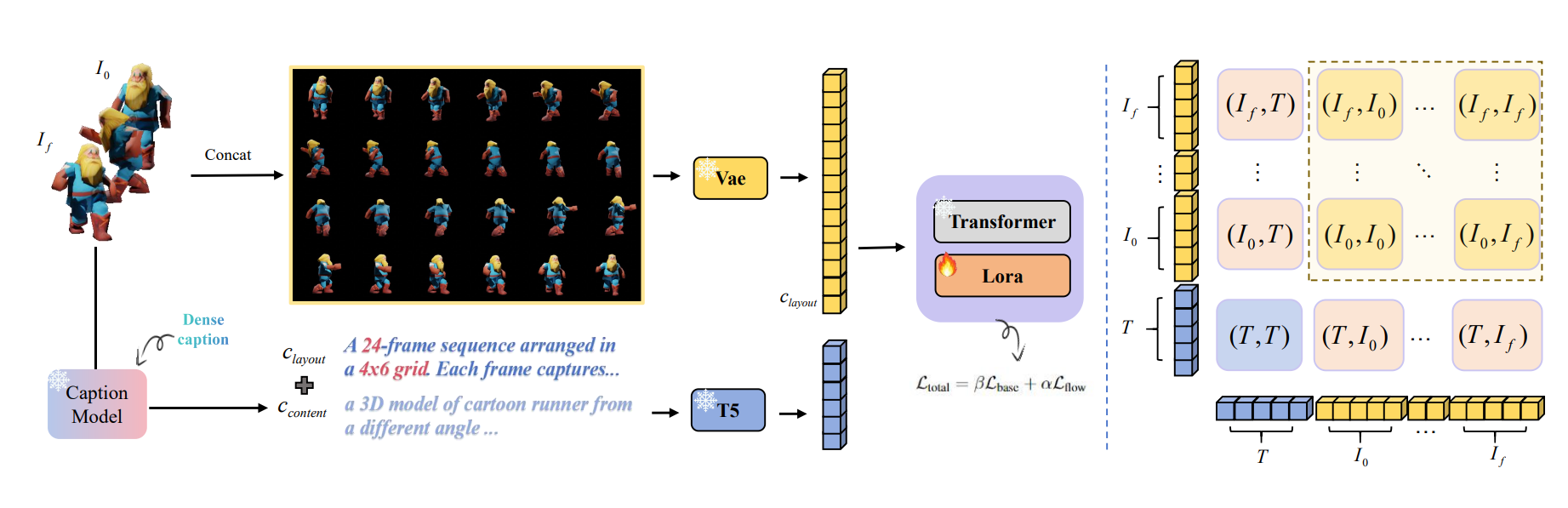

Grid: Omni Visual Generation

Cong Wan*, Xiangyang Luo*, Hao Luo, Zijian Cai, Yiren Song, Yunlong Zhao, Yifan Bai, Fan Wang, Yuhang He, Yihong Gong

PaperCode

- We introduces GRID, an omni-visual generation framework that reformulates temporal tasks like video into grid layouts, enabling a single powerful image model to efficiently handle image, video, and 3D generation.

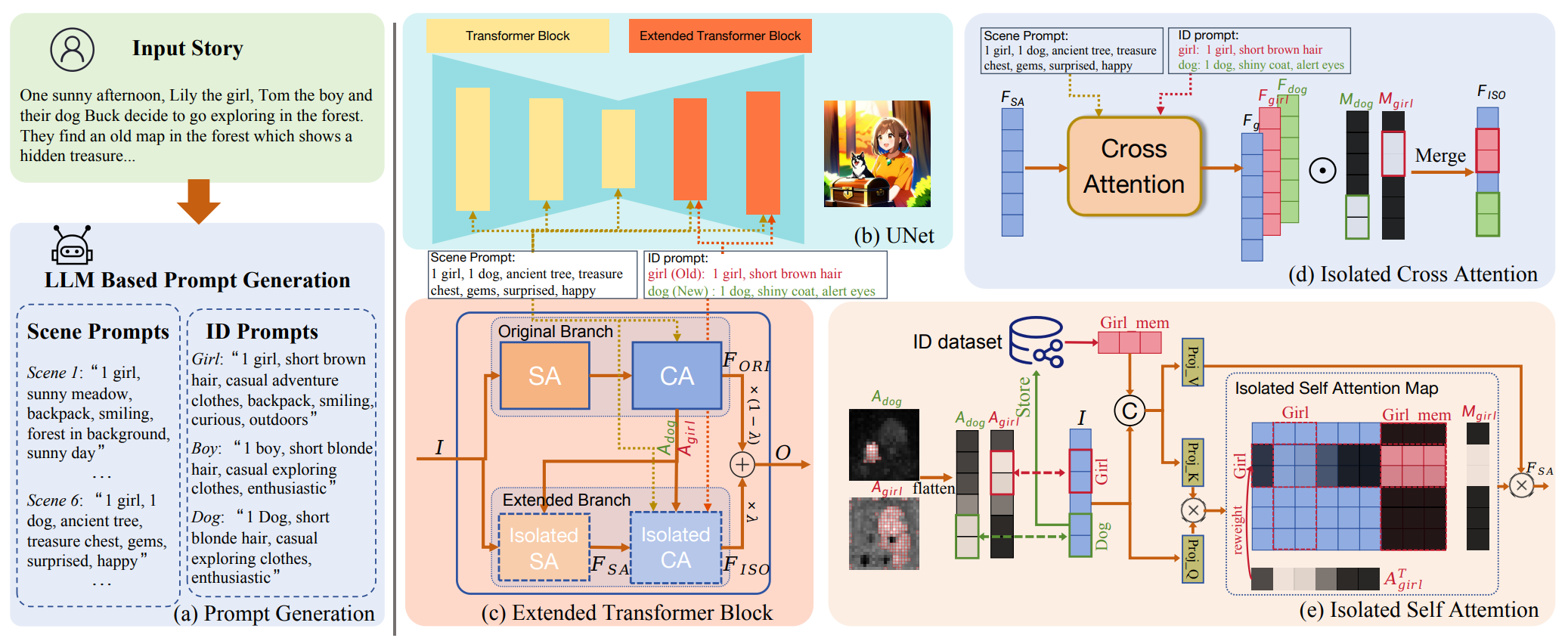

Object Isolated Attention for Consistent Story Visualization

Xiangyang Luo, Junhao Cheng, Yifan Xie, Xin Zhang, Tao Feng, Zhou Liu, Fei Ma†, Fei Yu

Paper

- We proposes a training-free method that uses isolated attention mechanisms to maintain character consistency and prevent feature confusion in story visualization.

CodeSwap: Symmetrically Face Swapping Based on Prior Codebook

Xiangyang Luo, Xin Zhang, Yifan Xie, Xinyi Tong, Weijiang Yu, Heng Chang, Fei Ma†, Fei Ricahrd Yu

Paper

- CodeSwap achieves high-fidelity face swapping by symmetrically manipulating codes within a pre-trained, high-quality facial codebook.